AI enthusiasts, your prayers have been heard.

OpenAI is jumping back into the open-model arena with plans to release a powerful open-weight language model with reasoning abilities in the coming months, CEO Sam Altman said on Monday.

"We are planning to release our first open-weight language model since GPT-2," Altman wrote in a post on X. "We've been thinking about this for a long time, but other priorities took precedence. Now it feels important to do."

> TL;DR: we are excited to release a powerful new open-weight language model with reasoning in the coming months, and we want to talk to devs about how to make it maximally useful: https://t.co/XKB4XxjREV
>
> we are excited to make this a very, very good model!
>
> we are planning to…
>
> Sam Altman (@sama), March 31, 2025

The announcement comes as OpenAI faces growing competition from rivals that include Meta and its Llama family of open-source models; Google’s Gemma, which comes with multimodal capabilities; and Chinese AI lab DeepSeek, which recently released an open-source reasoning model that reportedly outperformed OpenAI's o1.

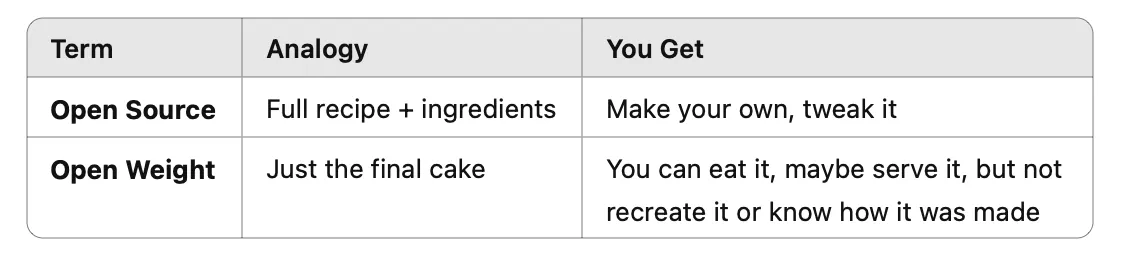

A model is open source when its developer shares everything about it with the public: users have access to the code, training dataset, and architecture, among other things.

That gives users the ability to modify and redistribute the model. An open-weight model is less open: users can run and fine-tune it, but cannot rebuild it from scratch because they don’t have access to key elements such as the training dataset or the training code.

To gather input on what developers actually want, OpenAI published a feedback form on its website and announced plans for developer events starting in San Francisco within weeks, followed by sessions in Europe and the Asia-Pacific region.

"We’re excited to collaborate with developers, researchers, and the broader community to gather inputs and make this model as useful as possible," the company said in its announcement.

Steven Heidel, who works on the API team at OpenAI, also shared that this model would be able to run locally: "We're releasing a model this year that you can run on your own hardware."

He didn’t specify the model's parameter count, context window, training dataset, or training techniques. He also didn't name the release license, which could, for example, restrict actions like reverse engineering or limit fine-tuning in specific countries.

The announcement today marks a significant departure from OpenAI's recent strategy of keeping its most advanced models locked behind APIs.

It also aligns with Altman's recent comments during a Reddit Q&A, where he first shared that the company was considering releasing a full open-source model.

"Yes, we are discussing (releasing some model weights and publishing some research)," Altman wrote. "I personally think we have been on the wrong side of history here and need to figure out a different open source strategy; not everyone at OpenAI shares this view, and it's also not our current highest priority."

The upcoming model will feature reasoning capabilities comparable to OpenAI's o3-mini, according to Altman's post. If it delivers on that, it could become the most capable open reasoning model to date, surpassing DeepSeek R1.

Edited by Sebastian Sinclair and Josh Quittner
